Ollama

How to use Ollama in Visual Studio Code

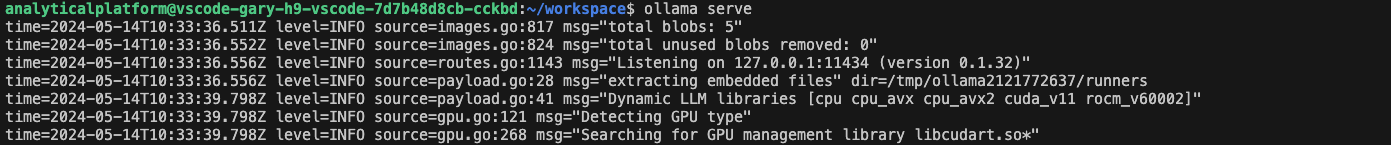

- Open a terminal session and run the following command to start Ollama:

`ollama serve`

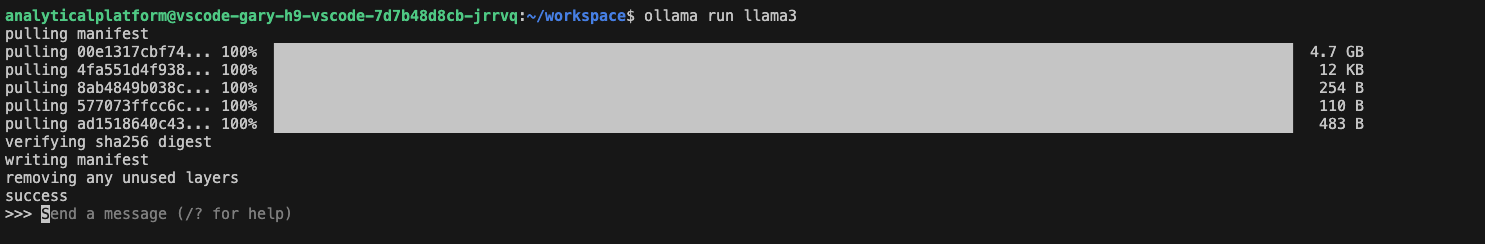

- Open a second terminal session (in Visual Studio Code, click the + symbol at the top right of the terminal) and then run:

`ollama run llama3`
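If `ollama run` cannot connect, the server started in the first terminal may not be running. By default `ollama serve` listens on http://localhost:11434, and a plain request to that address returns the text "Ollama is running". The minimal sketch below assumes that default address and that `curl` is installed. It wraps the check in a hypothetical `ollama_is_up` function that you can run from the second terminal.

```shell
# Hypothetical helper: succeeds if the Ollama server answers at the given URL.
# Assumes Ollama's default address (http://localhost:11434) and that curl is installed.
ollama_is_up() {
  url="${1:-http://localhost:11434}"
  # --fail returns non-zero on HTTP errors; --max-time stops the check from hanging.
  curl --silent --fail --max-time 5 "$url" > /dev/null 2>&1
}

if ollama_is_up; then
  echo "Ollama server is running"
else
  echo "Ollama server is not reachable; is 'ollama serve' still running in the first terminal?"
fi
```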

The first time you run a model, Ollama downloads it, which may take some time. Subsequent runs are much faster because the model does not need to be downloaded again. You can view the models you have downloaded with the `ollama list` command.
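To download a model ahead of time without starting a chat, you can use `ollama pull llama3`. The sketch below combines this with `ollama list` so that a model is only pulled if it is missing. The helper names `model_is_listed` and `ensure_model` are hypothetical. The parsing assumes that `ollama list` prints a header row followed by one row per model, with the model name (for example `llama3:latest`) in the first column.

```shell
# Hypothetical helper: reads `ollama list` output on stdin and succeeds if the
# model named in $1 appears in the first (NAME) column, with or without ":latest".
model_is_listed() {
  awk -v m="$1" 'NR > 1 && ($1 == m || $1 == (m ":latest")) { found = 1 } END { exit !found }'
}

# Hypothetical helper: pull the model named in $1 unless it is already downloaded.
ensure_model() {
  if ollama list 2>/dev/null | model_is_listed "$1"; then
    echo "$1 is already downloaded"
  else
    ollama pull "$1"
  fi
}
```

For example, `ensure_model llama3` prints a message if `llama3` is already downloaded and pulls it otherwise.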

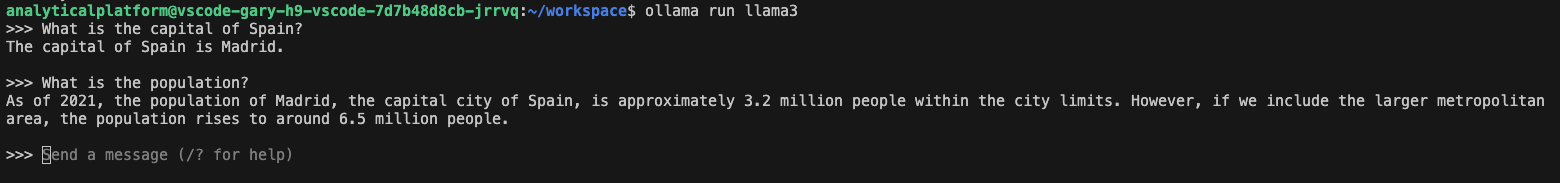

You can now interact with the model in the terminal in a chat-like fashion.

To query the model, type your text after the `>>>` prompt and press Enter.
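To leave the interactive chat, type `/bye` at the `>>>` prompt. For a one-off question, you can also pass the prompt directly, as in `ollama run llama3 "Why is the sky blue?"`, which prints the reply and exits. The server from the first terminal must still be running. Here is a minimal sketch of a hypothetical `ask` helper built on that form, assuming `ollama` is on your `PATH`:

```shell
# Hypothetical helper: send a single prompt and print the reply without opening
# the interactive >>> chat. MODEL defaults to llama3, the model used in this guide.
ask() {
  if ! command -v ollama > /dev/null 2>&1; then
    echo "ollama is not installed or not on PATH" >&2
    return 1
  fi
  # `ollama run MODEL "PROMPT"` prints the model's response and then exits.
  ollama run "${MODEL:-llama3}" "$*"
}
```

For example: `ask "Summarise what a confidence interval is in two sentences."`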

This example uses `llama3`, but other models are available in the Ollama Library.
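Every model in the Ollama Library is run the same way, so trying another one only means changing the name passed to `ollama run`. The sketch below uses a hypothetical `compare_models` helper to send one prompt to several models in turn. Any model that is not yet downloaded is pulled on first use, so the first run may be slow.

```shell
# Hypothetical helper: send the same prompt to several models in turn.
# Usage: compare_models PROMPT MODEL [MODEL...]
compare_models() {
  if [ "$#" -lt 2 ]; then
    echo "usage: compare_models PROMPT MODEL [MODEL...]" >&2
    return 1
  fi
  if ! command -v ollama > /dev/null 2>&1; then
    echo "ollama is not installed or not on PATH" >&2
    return 1
  fi
  prompt="$1"
  shift
  for model in "$@"; do
    echo "=== $model ==="
    # Models that are not yet downloaded are pulled on first use.
    ollama run "$model" "$prompt" || return 1
  done
}
```

For example, `compare_models "Explain a p-value in one sentence." llama3 mistral`. The model names here are examples, so check the Ollama Library for the models currently available.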

This page was last reviewed on 1 May 2022. It needs to be reviewed again on 1 July 2022 by the page owner #analytical-platform-support.
